Robots Exclusion Checker

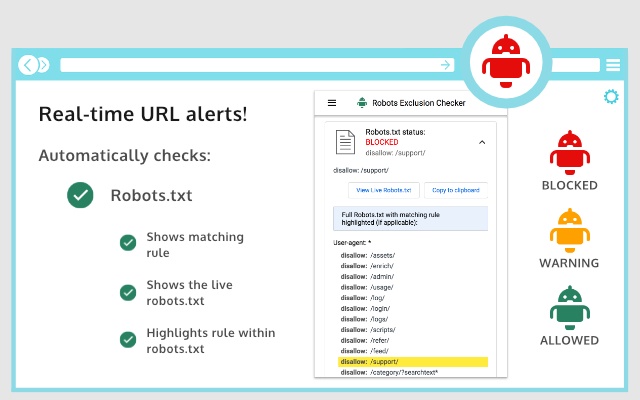

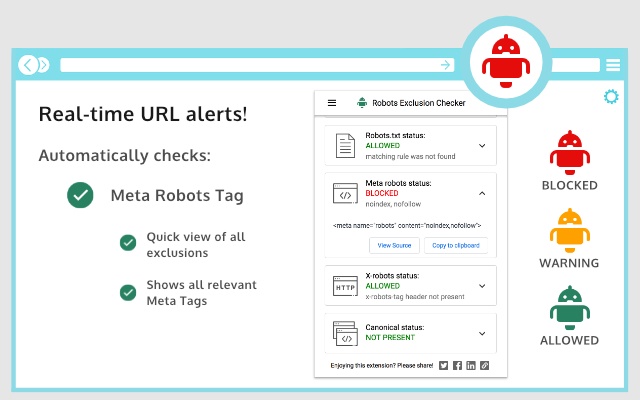

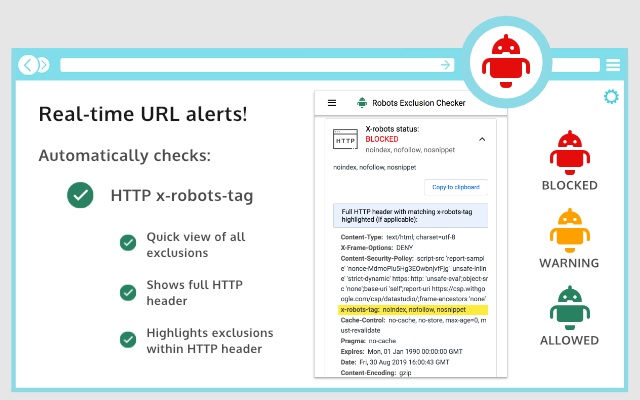

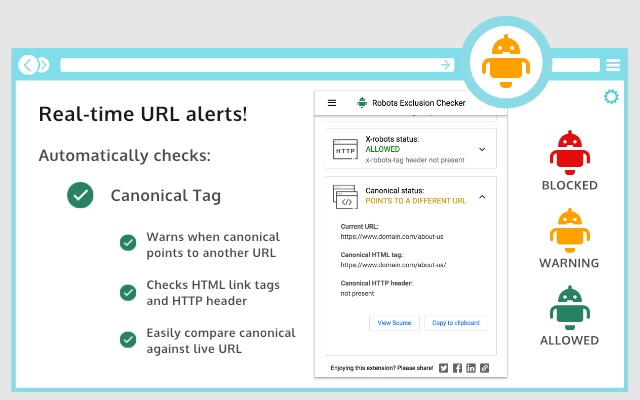

Robots Exclusion Checker is designed to visually indicate whether any robots exclusions are preventing your page from being crawled or indexed by search engines.

You can view this extension in the Chrome Web Store here: Robots Exclusion Checker

About Sam Gipson

Sam Gipson is an SEO consultant from London. He contacted me with an idea for a Chrome extension that would solve a common problem in his field, Search Engine Optimization. You can learn more about him on his website: Sam Gipson - London SEO Consultant & Search Engine Specialist.

About search engine crawlers

In the SEO world, the programs that crawl the internet and read articles and other content are called "robots" or "crawlers". Examples include Google's crawler and Bing's crawler. Web developers can tell these robots which content may be crawled and indexed and which pages should be ignored, using a special robots.txt file, HTTP headers, or HTML tags.
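To make the robots.txt part concrete, here is a minimal Python sketch using the standard library's `urllib.robotparser`. It is not the extension's code; the rules, the `Googlebot` user agent and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: every crawler is kept out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_crawl_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the rules let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
blog_allowed = is_crawl_allowed(parser, "Googlebot", "https://example.com/blog/")
private_allowed = is_crawl_allowed(parser, "Googlebot", "https://example.com/private/page.html")
```

With these sample rules the blog URL is allowed and the URL under /private/ is not.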

The problem

It is very important to keep track of and debug the robots.txt file, HTTP headers and HTML tags, to make sure that Google's crawler is allowed to index the correct pages and is prevented from scraping forbidden content. To check this information, you would have to manually open the robots.txt file and inspect both the HTML source of the page and the response headers.
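The header and HTML checks can be sketched in a few lines of Python. This is only an illustration of the manual steps, not how the extension is implemented. It assumes the page HTML and response headers have already been fetched, looks only at the `X-Robots-Tag` header and `<meta name="robots">` tags, and ignores crawler-specific variants such as `<meta name="googlebot">`. The helper names are hypothetical.

```python
from html.parser import HTMLParser


def split_directives(value: str) -> set:
    """Turn 'NOINDEX, nofollow' into {'noindex', 'nofollow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            self.directives |= split_directives(attr.get("content", ""))


def is_noindex(html: str, headers: dict) -> bool:
    """True if the meta tag or the X-Robots-Tag header asks not to index."""
    meta = MetaRobotsParser()
    meta.feed(html)
    header_value = next(
        (value for name, value in headers.items() if name.lower() == "x-robots-tag"),
        "",
    )
    directives = meta.directives | split_directives(header_value)
    # "none" is shorthand for "noindex, nofollow".
    return bool(directives & {"noindex", "none"})
```

For example, `is_noindex('<meta name="robots" content="noindex">', {})` reports a noindex, and so does an empty page served with the header `X-Robots-Tag: noindex`.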

The solution

That problem could be solved with a Chrome extension. Sam and I developed an extension that takes care of the manual work of debugging and verifying that all the robots information is correct. He provided his experience and knowledge of SEO, and I translated that into a working Chrome extension.

Chrome extensions and automated testing

The extension had to work with every website and every possible combination of robots.txt rules, headers and meta tags. Manually verifying that every case still worked after each change would be very tedious. This is a perfect example of a project where automated testing was invaluable.
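One common way to cover many combinations is a table of cases checked in a loop. The Python sketch below shows the idea only; it is not the extension's actual test suite. `page_status` is a hypothetical, simplified verdict function, and it assumes that a robots.txt block takes priority, since a crawler that cannot fetch the page never sees its noindex.

```python
def page_status(robots_txt_allows: bool, x_robots_tag: str, meta_robots: str) -> str:
    """Hypothetical verdict: 'blocked', 'noindex' or 'indexable'."""
    if not robots_txt_allows:
        return "blocked"
    combined = f"{x_robots_tag},{meta_robots}"
    directives = {part.strip().lower() for part in combined.split(",")}
    if directives & {"noindex", "none"}:
        return "noindex"
    return "indexable"


# Each row: robots.txt allows?, X-Robots-Tag value, meta robots value, expected.
CASES = [
    (True, "", "", "indexable"),
    (False, "", "", "blocked"),
    (False, "noindex", "noindex", "blocked"),
    (True, "noindex", "", "noindex"),
    (True, "", "NOINDEX, nofollow", "noindex"),
    (True, "", "nofollow", "indexable"),
]


def run_cases() -> list:
    """Return a description of every failing case (empty list means all pass)."""
    failures = []
    for allows, header, meta, expected in CASES:
        actual = page_status(allows, header, meta)
        if actual != expected:
            failures.append((allows, header, meta, expected, actual))
    return failures


failures = run_cases()
```

Adding a new combination is then a one-line change to `CASES`, which keeps the cost of covering another edge case low.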

Conclusion

I liked working on this project a lot. I learned a great deal about SEO and the details of search engine crawlers. I also learned the power of collaborating with somebody who is an expert in his field :)